
The Basics

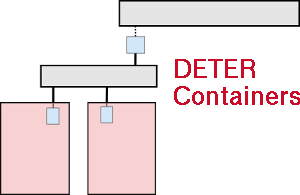

The neo-containers system uses cloud-container technology to abstract and generalize container creation and initialization. A per-experiment control node centralizes configuration details. At the DETER level, an experiment consists of the control node and a number of "pnodes", which serve as hosts for the virtualized containers. The control node configures itself, the pnodes, and the containerized nodes. The bootstrap script runs on the control node and: 1) installs configuration software on itself, 2) updates its own configuration, 3) updates the configuration of the other physical nodes (which includes setting up virtual networking and starting virtual machines), and 4) gives configuration information to the virtual nodes and allows them to configure themselves.

Execution Flow

The execution flow is as follows. The initialization uses the existing containers system as a bootstrap.

- Create a containerized experiment with an NS file and the `/share/containers/containerize.py` script.
- Modify the generated NS file.
  - Change the OS type of the pnodes to `PNODE-BASE`, e.g. make the line in the NS file: `tb-set-node-os ${pnode(0000)} PNODE-BASE`
  - Add a new control node. Traditionally it's been called "config", but there is no restriction on the name. Add this to the NS file:

        set config [$ns node]
        tb-set-node-os ${config} Ubuntu1404-64-STD
        tb-set-hardware ${config} MicroCloud
        tb-set-node-failure-action ${config} "nonfatal"

  - Remove the `tb-node-set-startcmd` line. This starts the existing DETER containers system on the nodes. We do not want that.
- Swap in the experiment.
- Run the bootstrap script on your `config` node.
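The NS file edits listed above are mechanical, so they could be scripted. The following is only a minimal sketch, not part of the neo-containers tooling: the function name `rewrite_ns` is hypothetical, and it assumes the generated NS file ends with the usual `$ns run` line and puts one command on each line. The start-command pattern matches both the spelling used above and the `tb-set-node-startcmd` spelling.

```python
import re

# Lines for the new control node, copied from the steps above.
CONFIG_NODE_LINES = [
    "set config [$ns node]",
    "tb-set-node-os ${config} Ubuntu1404-64-STD",
    "tb-set-hardware ${config} MicroCloud",
    'tb-set-node-failure-action ${config} "nonfatal"',
]

# Matches e.g.: tb-set-node-os ${pnode(0000)} SOME-OS
PNODE_OS = re.compile(r"^(\s*tb-set-node-os\s+\$\{?pnode\([^)]*\)\}?\s+)\S+\s*$")
# Matches the start-command line under either spelling.
STARTCMD = re.compile(r"^\s*tb-(?:node-set|set-node)-startcmd\b")


def rewrite_ns(text):
    """Return NS file text with the three modifications applied."""
    out = []
    inserted = False
    for line in text.splitlines():
        if STARTCMD.match(line):
            continue  # drop the line that starts the old containers system
        match = PNODE_OS.match(line)
        if match:
            line = match.group(1) + "PNODE-BASE"
        if not inserted and line.strip() == "$ns run":
            out.extend(CONFIG_NODE_LINES)  # assumption: add config before "$ns run"
            inserted = True
        out.append(line)
    if not inserted:
        out.extend(CONFIG_NODE_LINES)
    return "\n".join(out) + "\n"
```

Review the rewritten file by hand before swapping in, since the layout of a generated NS file may differ from what this sketch assumes.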

The bootstrap script:

- Uses the NFS mounted dir `/share/chef/chef-packages` to install Chef server and Chef client on the `config` node.
  - The `config` node is both a Chef server (talks to all machines in the experiment) and a Chef client/workstation (holds the Chef git repo which contains all configuration scripts).
- Configures Chef Server on the `config` node.
  - Simply runs the chef self-configure scripts.
- Configures Chef client/workstation on the `config` node.
  - Creates a chef-deter identity and organization (keys and names) for the Chef Server.
  - Installs `git` on the `config` node.
  - git clones the canonical Chef repository from the NFS mounted dir `/share/chef/chef-repo`. This repo contains all configuration scripts and data for the system.
  - Uploads all Chef recipes, data bags, and roles (configuration scripts, data, and roles) to the Chef server on `config` (localhost).
- Creates an experiment-specific "data bag" and uploads it to the Chef Server. The data contains the experiment name, project, group, the name and address of the `config` machine, and the name and address of the RESTful configuration server. This is per-experiment dynamic data and thus is generated at run time instead of being kept statically in the chef repository.
- Registers the `config` node (localhost) as a Chef client to the server, then downloads and executes all local configuration recipes that exist in the `config_server` chef role. (A chef role is simply a collection of recipes.) Details of these are in the next section.
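To make the experiment-specific data bag concrete, here is a minimal sketch of how such an item could be assembled at run time. The field names, the item id `experiment`, and the function name are assumptions for illustration, and the real bootstrap script may structure the data differently. The one firm requirement is Chef's own: every data bag item must carry an `id` field.

```python
import json


def build_experiment_item(experiment, project, group,
                          config_name, config_addr,
                          server_name, server_addr):
    """Assemble a data bag item holding the per-experiment values."""
    return {
        "id": "experiment",  # required by Chef; the value is hypothetical
        "experiment": experiment,
        "project": project,
        "group": group,
        "config_node": {"name": config_name, "address": config_addr},
        "config_server": {"name": server_name, "address": server_addr},
    }


def write_item(item, path):
    """Write the item as JSON so it can be uploaded to the Chef server."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(item, handle, indent=2, sort_keys=True)
        handle.write("\n")
```

A file written this way could then be uploaded with Chef's `knife data bag from file` command. The bag name is not given in this page, so that is left to the reader.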

The chef repository defines three roles in the system: config_server, pnode, and container. The code that defines the roles can be found in the ./roles directory in the chef repository.

config_server recipes

Recipes are chunks of code written in a mixture of Ruby and the Chef configuration definition language. A recipe is downloaded from a Chef server and run when chef-client is run on a node. This section describes the recipes that are run on the config_server machine itself. These recipes configure Chef communications on the nodes and tell the pnodes to configure themselves. (The pnode role recipe section is next.)

These recipes can be found in the ./cookbooks/config_server/recipes directory in the chef repository.

The config_server role consists of the following recipes, executed in order: config_db, config_server, hosts, bootstrap_pnodes, and configure_containers.
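A Chef role is essentially a named, ordered run list. As an illustration only, the sketch below renders the order given above as a role document in Chef's JSON role format. The actual files in ./roles may use the Ruby DSL instead, and the helper name `role_document` is hypothetical. The cookbook names follow this page: the recipes live in the config_server cookbook, except `hosts`, which lives in the pnode cookbook.

```python
import json

# (cookbook, recipe) pairs in execution order, as listed above.
CONFIG_SERVER_RECIPES = [
    ("config_server", "config_db"),
    ("config_server", "config_server"),
    ("pnode", "hosts"),
    ("config_server", "bootstrap_pnodes"),
    ("config_server", "configure_containers"),
]


def role_document(name, recipes):
    """Build a Chef role as a JSON-serializable dict."""
    return {
        "name": name,
        "chef_type": "role",
        "json_class": "Chef::Role",
        "run_list": ["recipe[%s::%s]" % (cookbook, recipe)
                     for cookbook, recipe in recipes],
    }


if __name__ == "__main__":
    print(json.dumps(role_document("config_server", CONFIG_SERVER_RECIPES), indent=2))
```

Chef runs the entries of a run list in the order given, which is why the order of the list matters here.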

`config_db` - This recipe builds the configuration database from existing containers and DETER/emulab files found on the local machine. This database contains all the information needed to configure the experiment. It is served RESTfully by the `config_server` process, which is started elsewhere. The recipe also installs the packages required to build the database (python3 and SQLAlchemy).

`config_server` - This recipe installs and starts the `config_server` process. This server RESTfully serves configuration data to anyone in the experiment that requests it. After installing required packages, the recipe simply calls the standard `setup.py` script in the config_server package to install it. It then copies a local `/etc/init` style script from the chef repository to `/etc/init.d/config_server` and invokes it, starting the daemon. At this point, requests for configuration information can be made on `http://config:5000/...`.

`hosts` - This recipe (which actually lives in the `pnode` cookbook directory) adds container node names and address information to `/etc/hosts`. This step is required for later, when the `config_server` node connects to the containers.

`bootstrap_pnodes` - This recipe connects to all pnode machines and configures them as chef clients to the new chef server. It then sets the default role for the pnode machines to the `pnode` role. Finally, it connects to all the pnode machines and invokes `chef-client` locally. This kicks off the next round of configuration in the system: all pnode machines configure themselves. These recipes are described in the next section.

`configure_containers` - This recipe simply uses the chef tool `knife` to connect to all (now running) containers and execute `chef-client`, which causes the containers to reach out to the chef server and request recipes/roles to execute. After this, the containers are fully configured and integrated into DETER.
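Once the `config_server` daemon is running, any node in the experiment can ask it for configuration data over HTTP. The sketch below shows what a small client might look like. It assumes the server answers with JSON, which this page does not state, and it takes the resource path as a parameter because the paths are not listed here. Both function names are hypothetical.

```python
import json
from urllib.parse import urljoin
from urllib.request import urlopen

# Base URL taken from the description above.
DEFAULT_BASE_URL = "http://config:5000/"


def config_url(path, base_url=DEFAULT_BASE_URL):
    """Join a resource path onto the config_server base URL."""
    return urljoin(base_url, path.lstrip("/"))


def fetch_config(path, base_url=DEFAULT_BASE_URL, timeout=10.0):
    """GET a resource and decode it, assuming a JSON response body."""
    with urlopen(config_url(path, base_url), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping the URL construction separate from the request makes it possible to check the former without a running server.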

pnode recipes

The pnode role consists of the following recipes, executed in order: uml-net-group, mount_space, hosts, diod, vde, and vagrant.

`uml-net-group` - This recipe creates a `uml-net` group. This is just a stop-gap recipe, needed because of a broken apt database in the PNODE-BASE image. It will be removed from the role and the chef repository once the PNODE-BASE image is rebuilt.

`mount_space` - This recipe formats and mounts the `/dev/sda4` partition on the disk. This space is subsequently used to store and run container images, the chef repository, etc.

`hosts` - This recipe creates entries in the pnode's `/etc/hosts` for all container nodes.

`diod` - This recipe installs, configures, and starts the `diod` daemon. The pnode has users' home directories and other directories mounted locally. The containers also need these mounted to be fully integrated with DETER. Unfortunately, it is not possible to use standard NFS mounting on directories that are themselves NFS mounted. (There are good reasons for this.) So the pnode uses `diod` to serve these directories to the container nodes. The recipe uses standard package tools to install `diod` and configures it to export all directories that are locally mounted, which is just what we want. It then starts the daemon.

`vde` - This recipe installs and configures `VDE`, Virtual Distributed Ethernet, on the pnode. It installs VDE2 and required packages (uml-utilities and bridge-utils). It installs an `/etc/init` style script to `/etc/init.d/vde_switch` to control the VDE daemon. It requests all virtual networking configuration information from the `config_server` and creates a number of VDE configuration files in `/etc/vde2/conf.d`, one for each switch needed. The recipe creates and configures all the TAP devices and bridges needed by the VDE switches. It then calls the `/etc/init.d/vde2` script to start all switches. At this point, all local network plumbing is in place and working.

`vagrant` - This recipe installs and configures `vagrant`. Vagrant is a front-end for configuring and spawning virtual machines. It supports many different virtual machine image types (qemu, LXC, VirtualBox, etc.), but the current neo-containers system only uses VirtualBox images. (A few default images are already configured and installed on the PNODE-BASE DETER image.) The recipe installs `vagrant` and `virtualbox`, although they are already installed on the PNODE-BASE image, so this is really a NOOP. After that, its big job is to create the `Vagrantfile` in `/space/vagrant` that describes the container images and basic configuration. The `Vagrantfile` does a few basic things: it configures virtual NICs, sets up basic networking so the containers route correctly to the DETER control net, and invokes a basic NOOP chef configuration that *only* registers the container node with the chef server. It does not invoke `chef-client` on the containers to configure the nodes; that happens next. The recipe also sets up a few things on the pnode for smoother vagrant operations: a `vagrant` user and a home directory at `/vagrant` are created, an `/etc/profile.d/vagrant.sh` file is created with vagrant-specific environment variables, and VirtualBox is configured to store disk image copies in `/space/vagrant` rather than on NFS mounted dirs. Finally, `/etc/init.d/vagrant` is invoked to spawn the containers. At this point, the containers are running, but not configured or integrated into DETER (no user accounts, mounts, etc.).
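To give a feel for what a generated `Vagrantfile` might contain, the sketch below renders one from a list of containers. It is not the recipe's actual template. The box name, the use of a `private_network` entry for each NIC, the validation key path, and the function name are all assumptions for illustration. The `chef_client` provisioner is given no recipes or roles, which mirrors the registration-only step described above.

```python
def render_vagrantfile(containers, box, chef_server_url, validation_key_path):
    """Render Vagrantfile text for containers given as dicts with 'name' and 'ip'."""
    lines = ['Vagrant.configure("2") do |config|']
    for container in containers:
        name = container["name"]
        ip = container["ip"]
        lines += [
            '  config.vm.define "%s" do |node|' % name,
            '    node.vm.box = "%s"' % box,  # hypothetical box name
            '    node.vm.hostname = "%s"' % name,
            '    node.vm.network "private_network", ip: "%s"' % ip,
            # Empty run list: the node registers with the server and nothing more.
            '    node.vm.provision "chef_client" do |chef|',
            '      chef.chef_server_url = "%s"' % chef_server_url,
            '      chef.validation_key_path = "%s"' % validation_key_path,
            '    end',
            '  end',
        ]
    lines.append("end")
    return "\n".join(lines) + "\n"
```

Generating the file from data served by the `config_server` keeps one pnode image usable for any experiment topology.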

container recipes

The container role consists of the following recipes: apt, hosts, groups, accounts, mounts. All these recipes work to integrate the container into the running DETER experiment.

`apt` - Runs `apt clean` and `apt update` on the nodes. It would be nice to get rid of this recipe, as it will slam the DETER apt repository server. We need to investigate whether and why it is still needed.

`hosts` - Adds container names and addresses to `/etc/hosts` so all containers can resolve each other's names.

`groups` - Requests group information from the `config_server` and creates the DETER groups on the container.

`accounts` - Requests user account information from the `config_server` and recreates the accounts locally on the container. This includes creating mount points for the user home directories.

`mounts` - This recipe installs the client side `diod` package and configures it to talk to the local pnode on which the container runs. It requests mount information from the `config_server` and mounts what it finds via `diod`.
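All three roles include a `hosts` recipe that adds container names and addresses to `/etc/hosts`. The sketch below shows one way that update could be made idempotent, so that repeated `chef-client` runs do not duplicate entries. The function name and the name-to-address mapping are assumptions for illustration; the actual recipes may well use a template instead.

```python
def merge_hosts(existing, containers):
    """Return hosts-file text with any missing container entries appended.

    existing   -- current contents of the hosts file
    containers -- mapping of container name to address
    """
    known = set()
    for line in existing.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments
        known.update(fields[1:])  # every field after the address is a name
    lines = existing.splitlines()
    for name, address in sorted(containers.items()):
        if name not in known:
            lines.append("%s\t%s" % (address, name))
    return "\n".join(lines) + "\n"
```

Running the function on its own output with the same mapping returns the text unchanged.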