| | 107 | openvz_template_dir:: |

| | 108 | The directory that stores openvz template files. Default: {{{%(exec_root)s/images/}}} (that is, the {{{images}}} directory in the {{{exec_root}}} directory defined in the site config file). |
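The {{{%(exec_root)s}}} form matches the interpolation syntax of Python's standard {{{configparser}}} module. The sketch below shows how such a default would resolve under that assumption. The section name {{{containers}}} and the {{{exec_root}}} value are hypothetical and are not taken from the real site config file.

```python
import configparser

# Hypothetical site config fragment: the section name and the exec_root
# value are assumptions made for illustration only.
SITE_CONFIG = """
[containers]
exec_root = /share/containers
openvz_template_dir = %(exec_root)s/images/
"""


def resolve_template_dir(config_text):
    """Return openvz_template_dir with %(exec_root)s expanded."""
    parser = configparser.ConfigParser()  # BasicInterpolation is the default
    parser.read_string(config_text)
    return parser.get("containers", "openvz_template_dir")


if __name__ == "__main__":
    print(resolve_template_dir(SITE_CONFIG))
```

With these assumed values, the template directory is the {{{images}}} directory under {{{/share/containers}}}.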

| | 109 | |

| | 110 | == Container Notes == |

| | 111 | |

| | 112 | Different container types have some quirks. This section lists limitations of each container. |

| | 113 | |

| | 114 | === Openvz === |

| | 115 | |

| | 116 | Openvz containers use a custom OS image to support their virtualization. They cannot share physical resources with other container types: a physical node holding openvz containers holds only openvz containers. They are interconnected with one another through bridges and kernel virtual networking rather than through VDE switches (as qemu and process containers are). As a result, openvz containers provide network delays using per-container endpoint traffic shaping. This means that they cannot correctly interconnect with traffic shaped qemu nodes. |

| | 117 | |

| | 118 | === Interconnections: VDE switches and local networking === |

| | 119 | |

| | 120 | The various containers are interconnected using either local kernel virtual networking or [http://wiki.virtualsquare.org/wiki/index.php/VDE VDE switches]. Kernel networking has lower overhead because it does not require process context switching, but VDE switches are a more general solution. |

| | 121 | |

| | 122 | Network behavior changes - loss, delay, rate limits - are introduced into a network of containers using one of two mechanisms: inserting elements into a VDE switch topology or end node traffic shaping. Inserting elements into the VDE switch topology allows the system to modify the behavior for all packets passing through it. Generally this means all packets to or from a host, as the container system inserts these elements in the path between the node and the switch. |
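As a rough illustration of why an element placed between a node and its switch affects all packets to or from that host, the sketch below models a single shared switch. It is a simplified model, not the container system's implementation: the function name, the host names, and the assumption that delays on the two links simply add are all hypothetical.

```python
def path_delay_ms(src, dst, link_delay_ms):
    """Delay added to one packet from src to dst across one shared switch.

    link_delay_ms maps a host name to the delay of the element inserted
    between that host and the switch; hosts without an element add 0.
    """
    # The packet crosses the element on src's link, then the one on dst's link.
    return link_delay_ms.get(src, 0) + link_delay_ms.get(dst, 0)


if __name__ == "__main__":
    delays = {"a": 10}  # only host "a" has a delay element on its link
    for src, dst in [("a", "b"), ("b", "a"), ("b", "c")]:
        print(src, "->", dst, path_delay_ms(src, dst, delays), "ms")
```

In this model, traffic in both directions between {{{a}}} and any peer picks up the delay, while traffic between {{{b}}} and {{{c}}} is unaffected.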

| | 123 | |

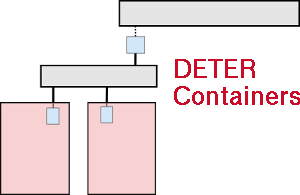

| | 124 | This figure shows 3 containers sharing a virtual LAN on a VDE switch with no traffic shaping: |

| | 125 | |

| | 126 | |

| | 127 | |

| | 128 | Openvz containers are interconnected through kernel networking directly to support their high efficiency, and because talking to another container implies leaving the physical machine. They induce network delays through [http://www.linuxfoundation.org/collaborate/workgroups/networking/netem end node traffic shaping]. |
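For readers unfamiliar with netem, end node traffic shaping is typically configured with the Linux {{{tc}}} tool. The sketch below only builds such a command line and does not run it. The helper name, the interface name, and the parameter values are hypothetical, and this is not necessarily how the container system invokes {{{tc}}}.

```python
def netem_command(interface, delay_ms=None, loss_pct=None):
    """Build a tc command that attaches a netem qdisc to an interface."""
    cmd = ["tc", "qdisc", "add", "dev", interface, "root", "netem"]
    if delay_ms is not None:
        cmd += ["delay", f"{delay_ms}ms"]  # fixed delay on outgoing packets
    if loss_pct is not None:
        cmd += ["loss", f"{loss_pct}%"]  # random loss percentage
    return cmd


if __name__ == "__main__":
    # Printing rather than executing: running tc requires root privileges.
    print(" ".join(netem_command("eth0", delay_ms=10, loss_pct=1)))
```

Because the qdisc is attached to the container's own interface, the shaping applies at the end node rather than at an element in the switch topology.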

| | 129 | |

| | 130 | Qemu nodes can support either end node tra |