
Reference Guide

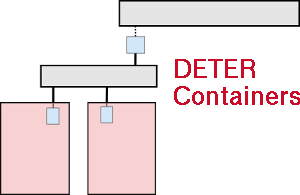

This document describes the details of the commands and data structures that make up the containers system. The User Guide/Tutorial provides useful context about the workflows and goals of the system that inform these technical details.

containerize.py

The containerize.py command creates a DETER experiment made up of containers. The containerize.py program is available from /share/containers/containerize.py on users.isi.deterlab.net. A sample invocation is:

$ /share/containers/containerize.py MyProject MyExperiment ~/mytopology.tcl

It creates a new experiment in MyProject called MyExperiment containing the experiment topology in mytopology.tcl. All the topology creation commands supported by DETER are supported by the containerization system, but Emulab/DETER program agents are not. Emulab/DETER start commands are supported.

Containers will create an experiment in a group if the project parameter is of the form project/group. To start an experiment in the testing group of the DETER project, the first parameter is specified as DETER/testing.
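As a minimal sketch of the project/group convention described above, the first parameter can be split on the slash. The function name split_project is hypothetical and is not part of containerize.py.

```python
def split_project(spec):
    """Split 'project/group' into (project, group).

    Hypothetical helper: group is None when no '/group' suffix is given.
    """
    project, _, group = spec.partition("/")
    return project, (group or None)


print(split_project("DETER/testing"))
print(split_project("MyProject"))
```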

Either an ns2 file or a topdl description is supported. Ns2 descriptions must end with .tcl or .ns. Other files are assumed to be topdl descriptions.
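The suffix rule above can be sketched as follows. This is an illustration only: the function name topology_format is hypothetical, and treating the suffix comparison as case-sensitive is an assumption, not documented behavior.

```python
import os

# Suffixes that mark an ns2 description, per the rule above.
NS2_SUFFIXES = (".tcl", ".ns")


def topology_format(path):
    """Return 'ns2' for .tcl/.ns files and 'topdl' for everything else."""
    _, ext = os.path.splitext(path)
    return "ns2" if ext in NS2_SUFFIXES else "topdl"


print(topology_format("mytopology.tcl"))
print(topology_format("experiment.xml"))
```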

By default, the containerize.py program partitions the topology into openvz containers, packed 10 containers per physical computer. If the topology is already partitioned (at least one element has a containers:partition attribute), containerize.py will not partition it. The --force-partition flag causes containerize.py to partition the experiment regardless of the presence of containers:partition attributes.
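For a rough sense of scale, the packing factor gives a lower bound on the number of physical computers an experiment needs. The sketch below is only that arithmetic. It is not the partitioning algorithm, which may use more machines depending on the topology, and the function name is hypothetical.

```python
import math


def min_physical_nodes(n_containers, packing=10):
    """Lower bound on physical nodes when at most `packing` containers share one."""
    if packing < 1:
        raise ValueError("packing must be at least 1")
    return math.ceil(n_containers / packing)


print(min_physical_nodes(25))        # default packing of 10
print(min_physical_nodes(100, 25))   # packing of 25
```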

If container types have been assigned to nodes using the containers:node_type attribute, containerize.py will respect them. Valid container types for the containers:node_type attribute or the --default-container parameter are:

| Parameter | Container |
|---|---|
| embedded_pnode | Physical Node |
| qemu | Qemu VM |
| openvz | Openvz Container |
| process | ViewOS process |

The containerize.py command takes several parameters that can change its behavior:

--default-container=kind- Containerize nodes without a container type into kind. If no nodes have been assigned containers, this puts all of them into kind containers.

--force-partition- Partition the experiment whether or not it has been partitioned already

--packing=int- Attempt to put int containers into each physical node. The default --packing is 10.

--config=filename- Read configuration variables from filename. The configuration values are discussed below.

--pnode-types=type1[,type2...]- Override the site configuration and request nodes of type1 (or type2 etc.) as host nodes.

--end-node-shaping- Attempt to do end node traffic shaping even in containers connected by VDE switches. This works with qemu nodes, but not process nodes. Topologies that include both openvz nodes and qemu nodes that shape traffic should use this. See the discussion below.

--vde-switch-shaping- Do traffic shaping in VDE switches. This is the default under the DETER site configuration (switch_shaping is true), but the site configuration controls it. See the discussion below.

--openvz-diskspace- Set the default openvz disk space size. The suffixes G and M stand for gigabytes and megabytes.

--openvz-template- Set the default openvz template. Templates are described in the User Guide.

--image- Construct a visualization of the virtual topology and leave it in the experiment directories (default)

--no-image- Do not construct a visualization of the virtual topology

--debug- Print additional diagnostics and leave failed DETER experiments on the testbed

--keep-tmp- Do not remove temporary files - for debugging only

This invocation:

$ ./containerize.py --packing 25 --default-container=qemu --force-partition DeterTest faber-packem ~/experiment.xml

takes the topology in ~/experiment.xml (which must be topdl), packs it into 25 qemu containers per physical node, and creates an experiment called DeterTest/faber-packem that can be swapped in. If experiment.xml was already partitioned, it is re-partitioned. If some nodes in that topology were already assigned to openvz containers, those nodes will still be in openvz containers.

The result of a successful containerize.py run is a DETER experiment that can be swapped in.
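When containerize.py is driven from a script, an invocation like the one above can be assembled as an argument list. The sketch below only builds the list and uses only flags documented in this guide. The helper name containerize_argv and its keyword arguments are hypothetical, and nothing is executed.

```python
import shlex


def containerize_argv(project, experiment, topology, packing=None,
                      default_container=None, force_partition=False,
                      program="/share/containers/containerize.py"):
    """Build an argument list for containerize.py (hypothetical helper)."""
    argv = [program]
    if packing is not None:
        argv.append(f"--packing={packing}")
    if default_container is not None:
        argv.append(f"--default-container={default_container}")
    if force_partition:
        argv.append("--force-partition")
    # Positional parameters: project (or project/group), experiment, topology.
    argv += [project, experiment, topology]
    return argv


argv = containerize_argv("DeterTest", "faber-packem", "experiment.xml",
                         packing=25, default_container="qemu",
                         force_partition=True)
print(shlex.join(argv))
```

The list can be passed to subprocess.run on a host where the program is installed. Paths that start with ~ should be expanded first (for example with os.path.expanduser), because no shell is involved.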

More detailed examples are available in the tutorial.

Site Configuration File

The site configuration file is an attribute-value pair file, parsed by a Python ConfigParser, that sets overall container parameters. Many of these have legacy internal names.

The default site configuration is in /share/containers/site.conf on users.isi.deterlab.net.

Acceptable values (and their DETER defaults) are:

- backend_server: The IRC server used as a backend coordination service for grandstand. Will be replaced by MAGI. Default: boss.isi.deterlab.net:6667

- grandstand_port: Port that third party applications can contact grandstand on. Will be replaced by MAGI. Default: 4919

- maverick_url: Default image used by qemu containers. Default: http://scratch/benito/pangolinbz.img.bz2

- url_base: Base URL of the DETER web interface on which users can see experiments. Default: http://www.isi.deterlab.net/

- qemu_host_hw: Hardware used by containers. Default: pc2133,bpc2133,MicroCloud

- xmlrpc_server: Host and port from which to request experiment creation. Default: boss.isi.deterlab.net:3069

- qemu_host_os: OSID to request for qemu container nodes. Default: Ubuntu1204-64-STD

- exec_root: Root of the directory tree holding containers software and libraries. Developers often change this. Default: /share/containers

- openvz_host_os: OSID to request for openvz nodes. Default: CentOS6-64-openvz

- openvz_guest_url: Location to load the openvz template from. Default: %(exec_root)s/images/ubuntu-10.04-x86.tar.gz

- switch_shaping: True if switched containers (see below) should do traffic shaping in the VDE switch that connects them. Default: true

- switched_containers: A list of the containers that are networked with VDE switches. Default: qemu,process

- openvz_template_dir: The directory that stores openvz template files. Default: %(exec_root)s/images/ (that is, the images directory in the exec_root directory defined in the site config file)
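The %(exec_root)s notation in the defaults above is ConfigParser interpolation: the value of exec_root is substituted when the option is read. The sketch below shows this with Python 3's configparser on a small inline sample. The section name [containers] is an assumption made for illustration, since this guide does not say which section header the real site.conf uses.

```python
import configparser

# Inline sample using a few of the documented keys and defaults.
# The [containers] section name is an assumption, not taken from site.conf.
SAMPLE = """
[containers]
exec_root = /share/containers
openvz_guest_url = %(exec_root)s/images/ubuntu-10.04-x86.tar.gz
switch_shaping = true
switched_containers = qemu,process
grandstand_port = 4919
"""

parser = configparser.ConfigParser()
parser.read_string(SAMPLE)
section = parser["containers"]

# %(exec_root)s is expanded on access.
guest_url = section["openvz_guest_url"]
# Typed accessors convert the raw strings.
switch_shaping = section.getboolean("switch_shaping")
port = section.getint("grandstand_port")
# Comma-separated values need to be split by the caller.
switched = [name.strip() for name in section["switched_containers"].split(",")]

print(guest_url, switch_shaping, port, switched)
```

A developer who changes exec_root gets matching template paths without editing every entry, which is presumably why the defaults are written this way.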

Container Notes

Different container types have some quirks. This section lists limitations of each container, as well as issues in interconnecting them.

Qemu

Qemu nodes are limited to 7 experimental interfaces. They currently run only Ubuntu 12.04 32 bit operating systems.
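A topology can be checked against the interface limit before it is containerized. The following is a minimal sketch that assumes the caller has already counted experimental interfaces per node. The function and its two dictionary arguments are hypothetical and are not part of the containers system.

```python
QEMU_MAX_INTERFACES = 7  # limit stated above


def oversubscribed_qemu_nodes(interface_counts, node_types):
    """Return the names of qemu nodes with more than 7 experimental interfaces.

    interface_counts: node name -> number of experimental interfaces
    node_types: node name -> container type (e.g. 'qemu', 'openvz')
    """
    return sorted(
        name for name, count in interface_counts.items()
        if node_types.get(name) == "qemu" and count > QEMU_MAX_INTERFACES
    )


counts = {"router": 9, "leaf": 1, "hub": 12}
types = {"router": "qemu", "leaf": "qemu", "hub": "openvz"}
print(oversubscribed_qemu_nodes(counts, types))
```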

ViewOS Processes

These have no way to log in or work as conventional machines. A process tree rooted in the start command is created, so a service will run with its own view of the network. It does not have an address on the control net.

Because of a bug in their internal routing, multi-homed processes do not respond correctly.

Interconnections: VDE switches and local networking

The various containers are interconnected using either local kernel virtual networking or VDE switches. Kernel networking is lower overhead because it does not require process context switching, but VDE switches are a more general solution.

Network behavior changes - loss, delay, rate limits - are introduced into a network of containers using one of two mechanisms: inserting elements into a VDE switch topology or end node traffic shaping. Inserting elements into the VDE switch topology allows the system to modify the behavior for all packets passing through it. Generally this means all packets to or from a host, as the container system inserts these elements in the path between the node and the switch.

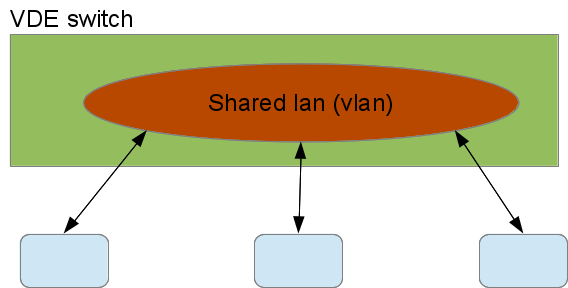

This figure shows 3 containers sharing a virtual LAN (VLAN) on a VDE switch with no traffic shaping:

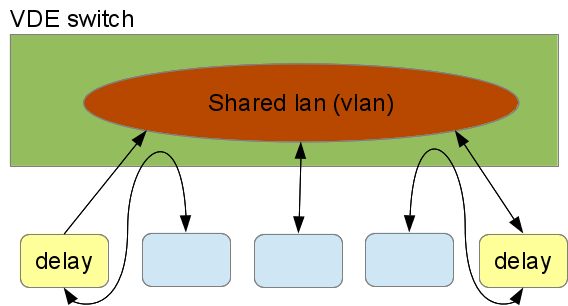

The blue containers connect to the switch and the switch has interconnected their VDE ports into the red shared VLAN. To add delays to two of the nodes on that VLAN, the following VDE switch configuration would be used:

The VDE switch connects the containers with shaped traffic to the delay elements, not to the shared VLAN. The delay elements are on the VLAN and delay all traffic passing through them. The container system configures the delay elements to delay traffic symmetrically: traffic from the LAN and traffic from the container are both delayed. The VDE tools can be configured asymmetrically as well. This is a very flexible way to interconnect containers.

That flexibility incurs a cost in overhead. Each delay element and the VDE switch is a process, so traffic passing from one delayed node to the other experiences 6 context switches: container -> switch, switch -> delay, delay -> switch, switch -> delay, delay -> switch, and switch -> container.
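The hop count can be reproduced by writing out the path a packet takes and counting one context switch per arrow in the list above. This is an illustrative model only, and the function names are hypothetical.

```python
def vde_path(delay_elements):
    """Sequence of processes a packet visits between two containers.

    Each delay element on the path is reached through the VDE switch.
    """
    path = ["container"]
    for _ in range(delay_elements):
        path += ["switch", "delay"]
    path += ["switch", "container"]
    return path


def context_switches(path):
    """One context switch per hand-off between consecutive processes."""
    return len(path) - 1


print(" -> ".join(vde_path(2)))
print(context_switches(vde_path(2)))
print(context_switches(vde_path(0)))
```

In this model an unshaped path through the switch costs two hand-offs, and every delay element adds two more.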

The alternative mechanism is to do the traffic shaping inside the nodes, using linux traffic shaping. In this case, traffic outbound from a container is delayed in the container for the full transit time to the next hop. The next node does the same. End-node shaping all happens in the kernel so it is relatively inexpensive at run time.
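On Linux, shaping of this kind is commonly done with the tc tool and the netem queueing discipline. The sketch below builds such a command for an outbound interface. Using netem is an assumption made for illustration: this guide says only that Linux traffic shaping is used, not which qdisc or parameters the containers system installs. The helper name is hypothetical and the command is only constructed, not run.

```python
def netem_command(device, delay_ms=None, loss_pct=None, rate_kbit=None):
    """Build a `tc qdisc add ... netem` command for outbound traffic on device.

    Illustrative only; the containers system may configure shaping differently.
    """
    cmd = ["tc", "qdisc", "add", "dev", device, "root", "netem"]
    if delay_ms is not None:
        cmd += ["delay", f"{delay_ms}ms"]
    if loss_pct is not None:
        cmd += ["loss", f"{loss_pct}%"]
    if rate_kbit is not None:
        cmd += ["rate", f"{rate_kbit}kbit"]
    return cmd


print(" ".join(netem_command("eth1", delay_ms=10, loss_pct=1)))
```

Because the qdisc is attached to the outbound side of the interface, each node shapes only what it sends, which matches the description above.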

Qemu nodes can make use of either end-node shaping or VDE shaping, and use VDE shaping by default. The --end-node-shaping and --vde-switch-shaping options to containerize.py force the choice for qemu nodes.

ViewOS processes can only use VDE shaping. Their network stack emulation is not rich enough to include traffic shaping.

Openvz nodes only use end-node traffic shaping. They have no native VDE support so interconnecting openvz containers to VDE switches would include both extra kernel crossings and extra context switches. Because a primary attraction of VDE switches is their efficiency, the containers system does not implement VDE interconnections to openvz.

Similarly, embedded physical nodes use only end-node traffic shaping, as routing outgoing traffic through a virtual switch infrastructure that just connects to its physical interfaces is at best confusing.

Unfortunately, end-node traffic shaping and VDE shaping are incompatible. Because end-node shaping does not impose delays on arriving traffic, it cannot delay traffic from a VDE delayed node correctly.

This is primarily of academic interest, unless a researcher wants to impose traffic shaping between containers using incompatible traffic shaping. There needs to be an unshaped link between the two kinds of traffic shaping.
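The shaping rules in this section can be summarized as a small lookup table. The sketch below encodes which mechanisms each container type supports, as stated above, and reports what two types have in common. The table and function names are hypothetical and are not part of the containers system.

```python
# Shaping mechanisms each container type can use, per the notes above.
SHAPING = {
    "qemu": {"vde", "end-node"},      # either; VDE by default
    "process": {"vde"},               # ViewOS processes: VDE only
    "openvz": {"end-node"},           # no native VDE support
    "embedded_pnode": {"end-node"},   # physical nodes
}


def common_shaping(type_a, type_b):
    """Mechanisms both container types support; empty means incompatible."""
    return SHAPING[type_a] & SHAPING[type_b]


print(common_shaping("qemu", "openvz"))
print(common_shaping("process", "openvz"))
```

An empty result, as for process and openvz, is the case where an unshaped link is needed between the two kinds of shaping. The qemu and openvz result is consistent with the advice to use --end-node-shaping for topologies that mix those two types.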
